Positive Comments: Rejecting the Use of AI Is a Sober Resistance to Technological Alienation, Guarding the Unique Value of Human Labor and Spirit

At a time when AI is sweeping the globe as a “synonym for the future,” a group of people is still choosing to swim against the tide. Writers such as Jess and Amy insist on work created by “real people”; programmer Ben refuses to let AI replace the programming process; and online writers such as Liu Chang stick to the “clumsy” method of typing by hand. This seemingly counter-trend choice is in fact a sober resistance to technological alienation, and it mounts a threefold defense: of labor rights, of deep skills, and of human values.

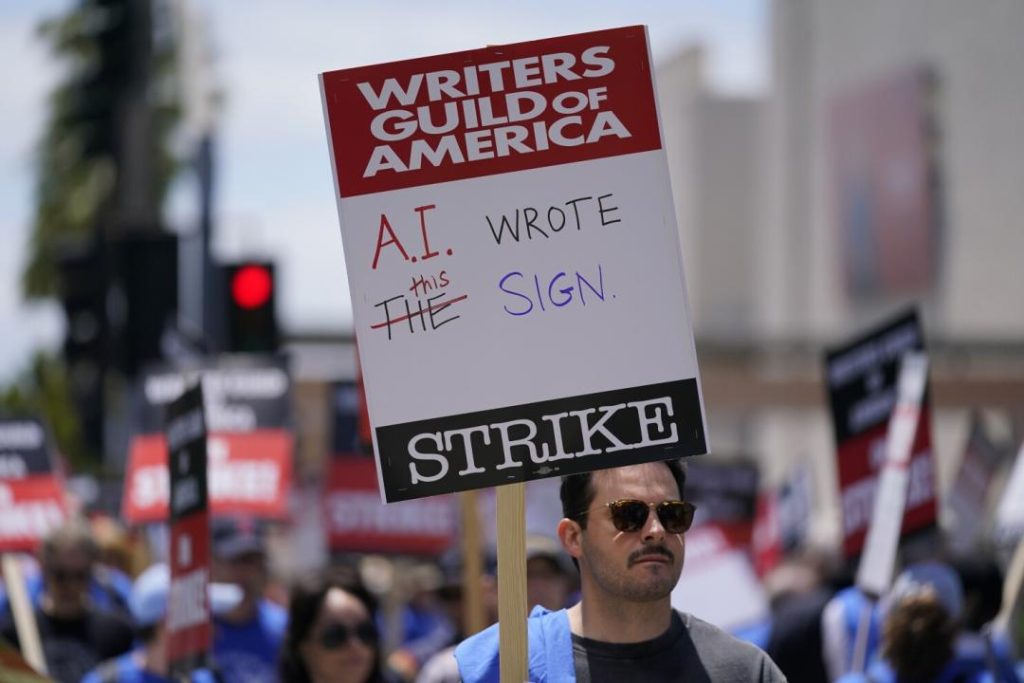

First, those who refuse to use AI are the “night watchmen” of labor rights in the technological era. The Hollywood screenwriters’ and actors’ strikes mentioned in the news, and Ben’s criticism that large models are trained on stolen labor, point to the same core contradiction: the “intelligence” of AI is built on a vast body of human creative work, yet the creators have received no reasonable compensation and may even be replaced by AI-generated content. This closed loop of “theft, training, feedback” exposes the lack of intellectual property protection in current AI development. Refusing to use AI is, in essence, a silent protest against “technological exploitation.” It reminds the industry that technological progress should not come at the expense of creators’ rights and interests. If AI is allowed to “freely use” the results of human labor without restraint, it will ultimately destroy the foundation of the creative ecosystem. As Ben said, “The large model is built on stolen labor.” Until this moral flaw is corrected, the “intelligence” of AI will remain shrouded in “original sin.”

Second, the refusal to use AI is a warning against the risk of “deskilling,” and it guards the depth and uniqueness of human skills. Research from MIT shows that relying on AI to complete tasks may reduce brain activity; short-video practitioners may lose their ability to create independently after long-term use of AI to write scripts; college students who use AI to write papers cannot understand the content. These cases reveal a deep-seated crisis: when AI becomes a “universal tool,” humans may change from “the subject who masters skills” into “the intermediary who inputs prompts.” Ben’s worry is not unfounded. If programmers rely on AI to write code, they may no longer understand the underlying algorithmic logic; if writers rely on AI to generate content, they may lose their sensitivity to words and their critical thinking. Refusing to use AI essentially defends the traditional path of “mastering skills through learning and practice.” This path is slow, but it allows people to truly “understand” knowledge rather than merely “use” it. As Ginny’s father warns in “Harry Potter”: “Never trust anything that can think for itself if you can’t see where it keeps its brain.” If humans give up the ability to “think for themselves,” they will eventually become the “puppets” of AI.

Finally, those who refuse to use AI demonstrate through their actions that the “creative process” and “human emotions” are irreplaceable. For Jess and Amy, the value of “real people writing in a meaningful way” far exceeds the efficiency of AI-generated content. For Xu Mei, the “process of thinking and expressing” is the core of the joy of creation, just as hiking up a mountain lets one experience the beauty of nature better than taking a cable car. For Liu Chang, “art is the expression of human thought,” and AI-generated content lacks intention and context and cannot replace the emotional interaction between people. These choices are not “conservative”; they are a reaction against “instrumental rationality.” Technology can improve efficiency, but it cannot replace the subjective experience of humans in creation and communication. As more and more people grow used to “quickly creating” content with AI and using AI to answer their friends’ confessions, the perseverance of the refusers is like a “lighthouse of humanity” in the technological torrent, reminding us that technology is a means, not an end, and that human emotions, creativity, and uniqueness are the foundation of civilization.

Negative Comments: The Realistic Dilemma of Refusing to Use AI – Individual Resistance Can Hardly Reverse the Technological Trend, and Excessive Rejection May Miss Development Opportunities

Although the choice to refuse AI is deeply reasonable, it undeniably faces multiple challenges in reality. The irreversibility of technological development, the pressure of market efficiency, and the risk of individuals falling out of step with their industry often turn “refusing AI” into a “doomed-to-fail battle,” and excessive rejection of technology may even cost the refusers development opportunities.

First, the irreversibility of technological development makes it difficult for individual resistance to change an industry trend. Historically, the Luddites’ smashing of textile machines failed to stop the Industrial Revolution, those who refused the Internet were eventually marginalized in the information age, and the voices against Douyin did not prevent the spread of short videos. As one of the most disruptive technologies today, AI has moved from being an “optional tool” to an “industry standard.” As mentioned in the news, even self-media practitioners who initially resisted AI have started using it to search for information and polish sentences because efficiency demands it; and although Liu Chang insists on typing by hand, his peers are using AI to update several novels simultaneously. In this trend, an individual’s refusal may leave them on an “isolated island.” When industry rules, user needs, and competitive pressure all lean towards AI, simply “not using” it may reduce the reach of one’s work and one’s income (as Jess and Amy mentioned, “monthly income has decreased”), and may even result in being eliminated by the market. The “snowball effect” of technology means that refusers who want to keep surviving must either adjust their strategies or accept marginalization.

Second, excessive rejection of AI may mean ignoring its positive value as an “auxiliary tool,” which limits the room for innovation of individuals and enterprises. AI is not a terrifying monster. Its core purpose is to “enhance human abilities” rather than “replace humans.” For example, AI can quickly organize materials, help spark inspiration, and streamline the creative process. Used sensibly, these functions let creators focus on the core aspects of their work, such as logical organization and emotional expression. Xu Mei’s choice in the news is quite representative: she uses AI to complete 80% of the basic tasks in her company job but insists on writing by hand in her personal creative work. This balance between “using it as a tool” and “retaining subjectivity” may be more sustainable than “completely refusing.” Those who reject AI entirely out of concern about its downsides may miss the opportunity of technological empowerment. For example, AI can help small teams reduce content production costs and extend their reach, and it can help developers quickly verify ideas and accelerate product iteration. Excessive refusal of AI may put individuals or enterprises at a disadvantage in the competition for efficiency.

Third, if those who refuse to use AI lack “constructive solutions,” they may fall into the trap of “opposing for the sake of opposition.” The current problems in AI development, such as unclear intellectual property rights and the risk of deskilling, require systematic solutions rather than simply “not using” AI. For example, the Hollywood strike ultimately restricted the abuse of AI through a labor-management agreement rather than a full-scale ban on AI. Likewise, Ben’s criticism that large models are based on stolen labor calls for laws that regulate the sourcing of training data (such as requiring model companies to pay reasonable compensation to creators), rather than for individual developers to “vote with their feet.” If refusers stop at the stance of “not using” and do not take part in rule-making and standard-setting, their resistance may amount to an emotional outburst and fail to truly promote industry progress. The balance between technology and humanity requires “critical use” rather than “complete rejection.”

Suggestions for Entrepreneurs: Find a Balance between Technology and Humanity and Be a “Sober AI User”

For entrepreneurs, AI is both an opportunity and a challenge. Faced with the two extremes of “refusing AI” and “blindly relying on AI,” a more rational path is to center on the balance between “instrumental rationality” and “value rationality” and to be a “sober AI user.” Specifically, entrepreneurs can start from the following three aspects:

Make Good Use of AI to Improve Efficiency, but Retain the “Subjectivity” of Core Skills: Entrepreneurs should clearly define AI’s position as an “auxiliary tool” and use it for highly repetitive, highly standardized tasks (such as organizing materials, generating basic code, and polishing text), so as to free up the team’s energy for the core innovation work (such as understanding user needs, designing logical frameworks, and expressing emotions). At the same time, they need to be vigilant against the risk of “deskilling.” For example, a technical team that relies completely on AI to write code may lose its in-depth understanding of algorithmic logic; a content team that relies on AI to generate content may lose its sensitivity to user emotions. Entrepreneurs need to ensure, through mechanisms such as training and assessment, that the team’s core skills (such as technical depth, creative ability, and critical thinking) are not replaced by AI.

Pay Attention to Intellectual Property Rights and Ethics and Establish “Responsible AI Use” Standards: The “training data” and “generated content” of AI involve complex intellectual property issues. When using AI tools, entrepreneurs need to confirm the legality of data sources (for example, by avoiding the use of unauthorized creative content to train models) and disclose AI assistance in the generated content (as in the Hollywood requirement that “AI-synthesized actors need to inform the union”). In addition, they need to take an active part in industry standard-setting (such as promoting rules on “copyright ownership of AI-generated content” and “creator compensation mechanisms”) so that the abuse of technology does not damage the industry ecosystem.

Integrate “Human Values” into Product Design to Avoid Technological Alienation: Entrepreneurs need to ask themselves: “Is my product using AI to enhance human subjectivity rather than weaken it?” For example, educational products can use AI to provide personalized learning paths, but they need to retain in-depth interaction between teachers and students; content products can use AI to optimize recommendation algorithms, but they need to keep users from being trapped in an “information cocoon”; social products can use AI to assist communication, but they need to encourage users to express real emotions rather than rely on “AI-generated words.” By embedding “human values” into the product logic, entrepreneurs can seize the efficiency dividend of AI while avoiding the trap of “technological alienation.”

Conclusion: Through their perseverance, those who refuse to use AI have rung a “humanity alarm bell” for technological development, while the mission of entrepreneurs is to find a balance between “efficiency” and “humanity” amid the technological wave. As Liu Chang said, “We cannot refuse AI, but we can choose to retain creativity, critical thinking, and human subjectivity.” This choice both respects technology and safeguards human civilization.
