Positive Comments: The AI Computing Power Race Accelerates Technological Iteration and Reshapes the Industrial Ecosystem

The “arms race” among global tech giants over AI computing power infrastructure has sparked debate about overheating, but its positive impact on technological progress and the industrial ecosystem is hard to deny. At its core, this competition is a battle for “dominance in future technologies,” and its positive significance shows up in three ways:

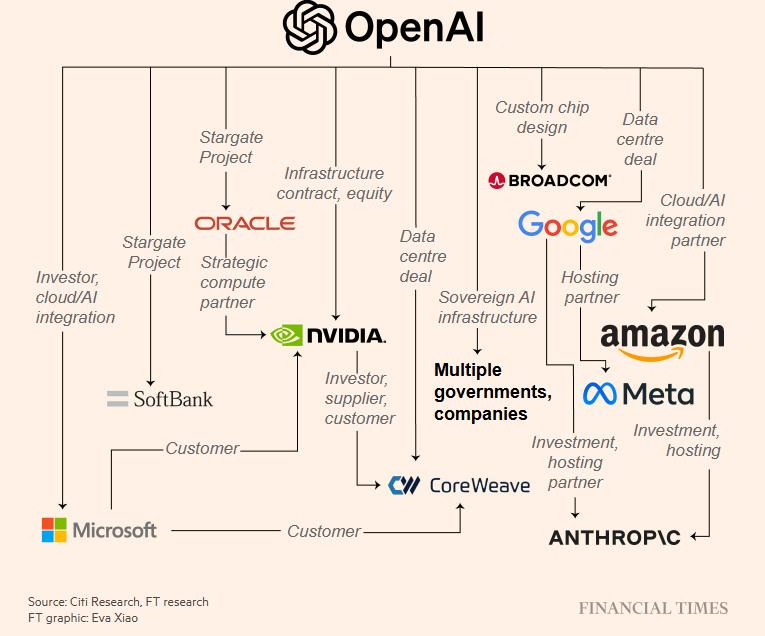

First, massive investment directly drives leapfrog development in AI computing power technology. Moves such as OpenAI signing a trillion-dollar computing power contract, Meta planning to invest $60 billion in building data centers before 2028, and Microsoft purchasing nearly 500,000 NVIDIA H100 chips all use capital leverage to drive “extraordinary growth” in computing power supply. This explosive expansion on the demand side forces chip manufacturers (such as NVIDIA and AMD) to accelerate R&D on higher-performance GPUs (such as the NVIDIA GB300 and AMD MI450) and pushes data center architectures toward more integrated, more efficient designs such as “rack-type supercomputers.” For example, the MI450 chip that AMD is customizing for OpenAI has to compete with NVIDIA’s Vera Rubin chip, which launches in the same period. This head-to-head race will directly speed up the iteration of chip performance. According to public information, Vera Rubin delivers 3.3 times the computing performance of the current top-end Blackwell chip, and the AMD MI450 will need to reach a comparable level to stay competitive. That urgency to achieve a technological breakthrough will translate into higher R&D efficiency.

Second, the large-scale release of computing power lays the foundation for the popularization of AI applications. The explosion of generative AI depends on the twin engines of “large models + large computing power.” The huge investments that the giants are now making in GPUs and data centers are, in effect, paving the way for the “democratization” of AI applications. For example, Oracle provides cloud computing power leasing services to OpenAI through the “Stargate” project. Although the current gross profit margin on this business is only 14%, once computing power costs fall with scale (for example, through a lower cost per watt from future chip mass production and better data center energy efficiency), cloud providers can offer low-cost computing power to small and medium-sized developers under a low-margin, high-volume model, promoting the penetration of AI into vertical fields such as healthcare, education, and industry. The multi-billion-dollar cloud computing agreements that Meta has signed with Google and Oracle likewise reduce its own AI training costs through resource pooling and ultimately pass the “computing power dividend” on to its consumer-facing products, such as social platforms and the metaverse, accelerating the spread of AI features.

Third, the reshaping of the competitive landscape energizes innovation across the industrial chain. For a long time, NVIDIA has held a “monopoly advantage” with an 80% to 95% share of the AI chip market. Customers have had no bargaining power in chip procurement, and technological iteration has depended on the pace of a single manufacturer. However, moves such as OpenAI’s cooperation with AMD (deploying 6 GW of AMD GPUs), Meta’s $14 billion agreement with CoreWeave (bringing in a third-party computing power supplier), and Microsoft’s leasing of NeoCloud servers are breaking this pattern. By tying itself to OpenAI, AMD has gained a “strategic-level customer,” which can not only quickly increase its data center revenue (expected to run to hundreds of billions of dollars over the next few years) but also help it optimize chip design using OpenAI’s technical feedback. Small and medium-sized computing power providers (such as CoreWeave and NeoCloud) are exploring a differentiated model of “computing power leasing + customized services” by taking on demand from the giants. This “multi-polar” competition will promote broad innovation in chip performance, computing power service models, and data center energy efficiency, ultimately lowering the barrier to using computing power for the entire industry.

Negative Comments: Overheated Investment Hides Three Risks, and Profitability and Sustainability Are in Doubt

Although the AI computing power race matters a great deal at the technological level, the investment logic, profit model, and potential risks behind it also deserve attention. The “irrational exuberance” in the current market has exposed three major hidden dangers:

First, circular financing and profit inversion may trigger a capital chain crisis. Transactions mentioned in the news, such as “NVIDIA investing in OpenAI so that it can buy NVIDIA chips” and “AMD exchanging warrants for OpenAI’s procurement,” are essentially a circular model in which suppliers finance their own customers. For example, NVIDIA has promised to invest $100 billion in OpenAI over the next decade so that OpenAI can buy its chips. OpenAI recovers funds by leasing chips or selling cloud services, but its annual loss has reached $10 billion, and its revenue is far smaller than its computing power commitments (the trillion-dollar computing power contract signed this year far exceeds its revenue). This “borrowing new to pay old” model depends on OpenAI’s expected future profits. If the commercialization of its generative AI products (such as ChatGPT) falls short of expectations (for example, a low share of paying users or shrinking enterprise budgets), or if chip prices drop sharply as technology iterates (leaving the “high-cost computing power” locked into existing contracts uncompetitive), OpenAI may find that its revenue cannot cover its computing power costs. That would in turn affect its ability to pay suppliers and ultimately harm the financial health of upstream companies such as NVIDIA and AMD. Similar risks have already emerged in Oracle’s cloud business: the gross profit margin of its NVIDIA cloud service is only 14%, far below the company’s overall level of 70%. If computing power leasing prices fail to rise as scale increases, or if chip procurement costs stay high because of supply shortages, Oracle’s cloud business may become a cash-consuming black hole.
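To make the thin-margin concern concrete, the arithmetic can be sketched as follows. This is a minimal illustration, not a model of Oracle’s actual business: it takes only the 14% and 70% gross margin figures from the text, assumes unit costs stay fixed, and applies a hypothetical 10% drop in leasing price. The function name `margin_after_price_change` is invented for this example.

```python
def margin_after_price_change(gross_margin: float, price_change: float) -> float:
    """Return the gross margin after the selling price changes by
    `price_change` (e.g. -0.10 for a 10% cut), with unit cost held fixed.

    Assumption: revenue is normalized to 1.0 before the change, so the
    cost share is simply 1 - gross_margin.
    """
    cost_share = 1.0 - gross_margin   # cost per unit of original revenue
    new_price = 1.0 + price_change    # new revenue per unit sold
    if new_price <= 0:
        raise ValueError("price_change must be greater than -1")
    return (new_price - cost_share) / new_price


# Hypothetical 10% price cut applied to the two margins cited in the text.
thin = margin_after_price_change(0.14, -0.10)   # 14% margin business
thick = margin_after_price_change(0.70, -0.10)  # 70% margin business
print(f"14% margin becomes {thin:.1%}; 70% margin becomes {thick:.1%}")
```

Under these assumptions, the same price cut wipes out most of the 14% margin while barely denting the 70% one, which is why a low-margin leasing business is so sensitive to pricing and procurement costs.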

Second, capital misallocation may waste resources and repeat the mistakes of the dot-com bubble. The technology giants’ current investment in AI computing power far exceeds historical levels: Meta plans capital expenditure of $66 billion to $72 billion in 2025 (mainly for AI data centers), and the combined capital expenditure of four companies including Amazon, Microsoft, and Google is expected to reach $320 billion in 2025 (more than Finland’s GDP). This “cost-is-no-object” investment closely resembles the fiber-optic cable build-out during the dot-com bubble around 2000. At that time, companies rushed to lay fiber to stake out a position in Internet infrastructure; when demand proved insufficient, shareholders lost $2 trillion and 500,000 jobs disappeared. Although the long-term potential of AI is promising (Lisa Su has called it a “ten-year super cycle”), it remains doubtful whether short-term demand can support computing power supply on this scale. At present, the main applications of generative AI are concentrated in low-value-added scenarios such as chat and content generation. Deployment in high-value scenarios such as healthcare and industry still has to solve problems such as data compliance and model accuracy. If computing power supply grows far faster than actual demand, large numbers of data centers and GPUs may sit idle, wasting resources.

Third, reliance on a single technological path may suppress the diversity of innovation. The market’s current heavy dependence on GPUs (NVIDIA accounts for 90% of the training chip market) may lead the industry to neglect other computing power paths. For example, ASICs (application-specific integrated circuits) are far more efficient than general-purpose GPUs on specific AI tasks (such as image recognition and voice processing), yet OpenAI’s ASIC cooperation with Broadcom (a $10 billion order) has been overshadowed by its GPU deals with AMD and NVIDIA. FPGAs (field-programmable gate arrays) offer a flexibility advantage in real-time inference scenarios, but the “performance halo” of GPUs has drawn market attention away from them. This “GPU-only” mindset may lock technological innovation into a single path. If a more efficient computing architecture (such as quantum computing or neuromorphic chips) emerges in the future, today’s huge GPU-based investments may face technological obsolescence. In addition, the dominance of a single supplier (such as NVIDIA) raises supply chain security concerns. If GPU supply is interrupted by geopolitical issues or swings in production capacity, companies that rely on it (such as OpenAI and Microsoft) risk having their business stall.

Advice for Entrepreneurs: Plan Computing Power Resources Rationally, and Focus on Technology Implementation and Risk Control

With “overheating” and “opportunity” coexisting in the AI computing power market, entrepreneurs need to stay clear-headed and adopt differentiated strategies for computing power planning, technology R&D, and commercialization:

- Avoid blindly following the “computing power arms race,” and match computing power to actual needs first. Small and medium-sized startups do not need to copy the giants’ model of “hoarding GPUs.” They can lease computing power on demand through cloud services (such as Oracle Cloud and Google Cloud) or reduce costs by choosing more cost-effective AMD chips or domestic (Chinese) GPUs (such as those from Biren Technology and Days Intelligence). They should also choose the type of computing power according to their own model requirements (training versus inference, model scale). For example, lightweight models can run on FPGAs or ASICs to avoid paying extra for “redundant performance.”

- Diversify computing power and reduce supply chain risk. Avoid relying on a single chip supplier (such as NVIDIA). Entrepreneurs can spread the risks of technological obsolescence and supply interruption by deploying a mix of GPUs (NVIDIA/AMD), ASICs (Broadcom/domestic manufacturers), and other types of computing power. For example, AI healthcare startups can customize ASICs for image recognition tasks to improve inference efficiency; intelligent driving companies can combine GPUs (for handling complex scenarios) with FPGAs (for real-time response) to optimize their computing architecture.

- Focus on technology implementation and commercialization, and avoid pursuing computing power for its own sake. Computing power is the “fuel” for AI applications, but the ultimate value lies in the “engine” that the fuel drives, that is, solutions for specific scenarios. Entrepreneurs should first verify that the business model works (for example, customers’ willingness to pay and repurchase rate) and then expand computing power investment gradually as demand grows. For example, an AI tool in the education field can first serve its early users through cloud services and consider building its own small-scale data center or customizing computing equipment only after its base of paying users stabilizes.

- Stay alert to bubble risk and maintain financial health. When raising funds, entrepreneurs should be clear about the payback period of computing power investment and avoid accepting aggressive terms such as “bet-on” (valuation adjustment) agreements. During operation, they should monitor computing power cost as a share of revenue (for example, keeping it below 30% of revenue). If costs are growing far faster than revenue, they should adjust the technology route promptly (for example, by optimizing model efficiency or switching to lower-cost computing power). They can also share costs and improve resource utilization through a “computing power sharing” model (co-building data centers with other startups in the same industry).
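The monitoring rule in the last point can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a prescribed method: the 30% ceiling is the example threshold from the text, while the `Period` type, the `compute_cost_warnings` function, and the sample figures are hypothetical. For simplicity it flags any period in which compute cost grows faster than revenue, whereas the text speaks of cost growth that “far exceeds” revenue growth.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Revenue and computing power cost for one reporting period (same currency)."""
    revenue: float
    compute_cost: float


def compute_cost_warnings(prev: Period, curr: Period, max_ratio: float = 0.30) -> list[str]:
    """Return warnings for the current period.

    Checks two things from the advice above:
    1. compute cost as a share of revenue against `max_ratio`;
    2. whether compute cost grew faster than revenue since `prev`.
    """
    if curr.revenue <= 0:
        return ["no revenue in the current period; cost ratio is undefined"]

    warnings: list[str] = []
    ratio = curr.compute_cost / curr.revenue
    if ratio > max_ratio:
        warnings.append(f"compute cost is {ratio:.0%} of revenue (limit {max_ratio:.0%})")

    # Growth rates are only meaningful when the previous period is non-zero.
    if prev.revenue > 0 and prev.compute_cost > 0:
        revenue_growth = curr.revenue / prev.revenue - 1
        cost_growth = curr.compute_cost / prev.compute_cost - 1
        if cost_growth > revenue_growth:
            warnings.append(
                f"compute cost grew {cost_growth:.0%} vs revenue {revenue_growth:.0%}"
            )
    return warnings


# Hypothetical quarters: revenue 100 -> 120, compute cost 20 -> 42.
for message in compute_cost_warnings(Period(100, 20), Period(120, 42)):
    print(message)
```

A startup could run a check like this on each monthly or quarterly close and treat any warning as a prompt to revisit model efficiency or the source of its computing power.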

In summary, the current AI computing power race is both a “catalyst” for technological progress and a “warning light” for overheated capital. Entrepreneurs need to seize the computing power dividend while keeping a healthy respect for risk. By sticking to the principles of demand-driven investment, diversified deployment, and stable operation, they can go further and more steadily in the AI wave.
